
Data

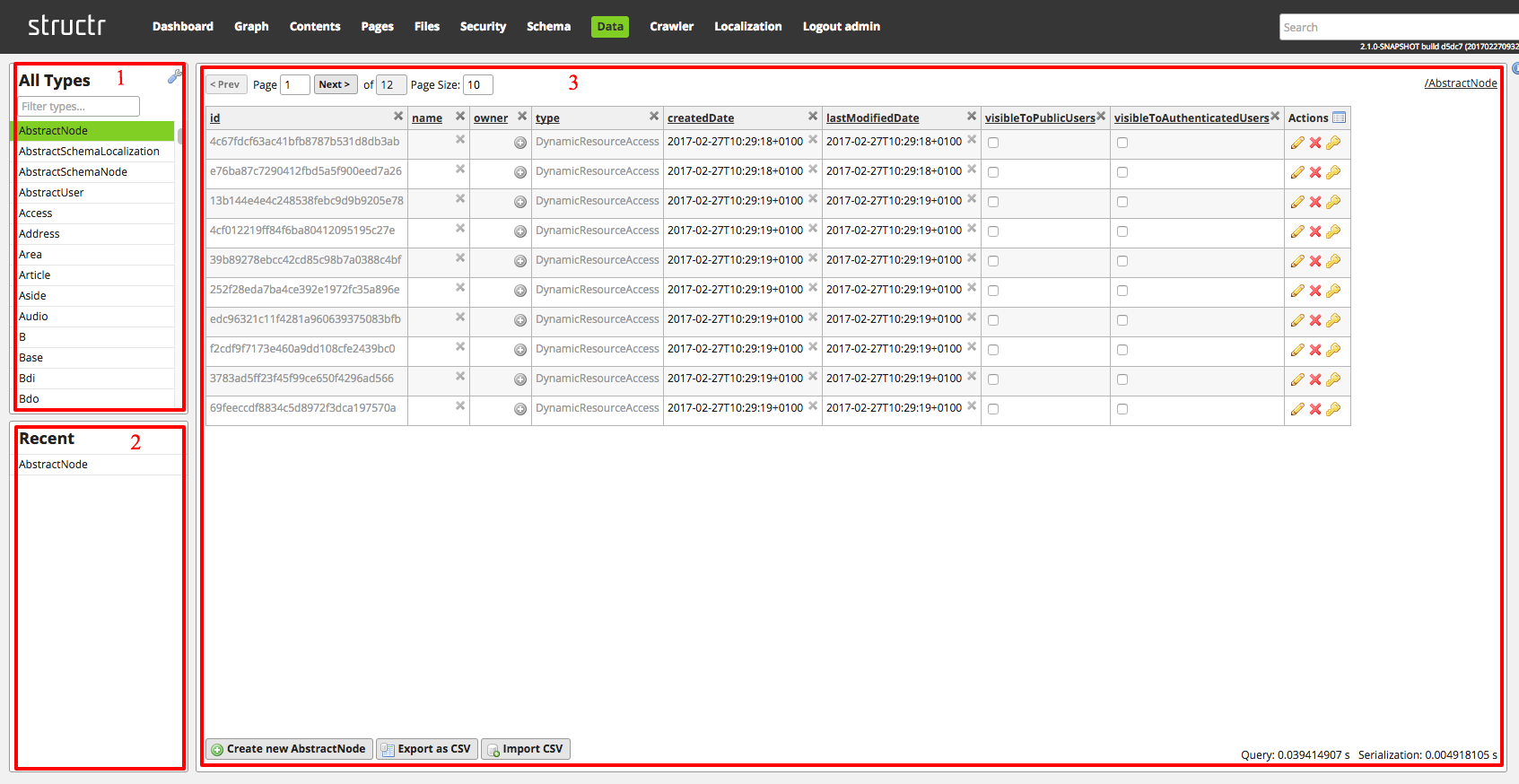

The Data area provides a data editing frontend for any data stored in Structr. For each built-in and custom type configured in Structr, a list of objects can be loaded.

This area is sometimes also referred to as the CRUD (Create, Read, Update, Delete) interface of Structr, because it uses the REST API and does not access data via the WebSocket connector.

The CRUD interface is split into three main areas:

- (1) The list of available types (this includes built-in and dynamic types)
  - The list can be filtered by entering a search expression in the search box or by clicking the wrench icon
- (2) The list of recently accessed types. Every time a user loads a list view in the CRUD interface, the type is inserted into this list of recent types
  - Specific entries can be removed from the list by clicking the ‘X’ icon that appears when hovering over the entry
- (3) The list of objects of a certain type
  - This view can be customized using the pager above the table
  - Objects of that type can be created by clicking the “Create” button at the bottom
  - The CSV functionality (import/export) can be accessed via the corresponding buttons at the bottom
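Because the Data area works on top of the REST API, a paged list view corresponds roughly to a REST request for one page of one type. The sketch below only builds such a URL. The helper name list_url, the host and port, the /structr/rest base path and the default page size of 25 are assumptions for illustration, as is the use of the _page and _pageSize query parameters for paging.

```shell
#!/bin/sh
# Hypothetical helper (not part of Structr): builds the REST URL for one page
# of objects of a given type. Host, port, base path, the _page/_pageSize
# parameter names and the default page size of 25 are assumptions.
list_url() {
  type_name=$1
  page=${2:-1}        # page number, assumed to be 1-based
  page_size=${3:-25}  # number of objects per page
  printf 'http://localhost:8082/structr/rest/%s?_page=%s&_pageSize=%s\n' \
    "$type_name" "$page" "$page_size"
}

# Second page of 10 projects (needs a running instance and valid credentials):
# curl --header 'X-User: admin' --header 'X-Password: admin' "$(list_url Project 2 10)"
```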


data

The data keyword returns the current element in an each() loop iteration or in a filter() expression.

Log messages

The reason for this is that, since version 4.1-SNAPSHOT, Structr supports connecting to different Neo4j databases, so the database name has to be configured. By default the database name is set to “neo4j” (the default database name since Neo4j 4.x). If your application was started with an older version of Neo4j, the database name might be “graph.db”. If you have configured a different name, that name has to be used.

The configuration for this is done in structr.conf. A new configuration line has to be added to the existing configuration.

Let us assume the configuration looks like this:

XXXXXXXXXX.database.connection.name = <a name for your connection>

XXXXXXXXXX.database.connection.password = <your database user password>

XXXXXXXXXX.database.connection.url = <your connection string>

XXXXXXXXXX.database.connection.username = <your database username>

XXXXXXXXXX is the custom prefix in your settings.

The new line must use the same XXXXXXXXXX prefix and look like this:

XXXXXXXXXX.database.connection.databasename = <your database name>
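Instead of editing the file by hand, the line can also be appended with a small shell function. This is a sketch only: add_databasename is a hypothetical helper, not a Structr tool, and it assumes structr.conf uses the `key = value` layout shown above. It refuses to add the setting a second time for the same prefix.

```shell
#!/bin/sh
# Hypothetical helper (not part of Structr): appends the databasename setting
# for one connection prefix to a structr.conf-style file.
# Usage: add_databasename <conf file> <prefix> <database name>
add_databasename() {
  conf=$1
  prefix=$2
  name=$3
  key="$prefix.database.connection.databasename"
  # Do not add the setting twice for the same prefix
  if grep -q "^$key[[:space:]]*=" "$conf"; then
    echo "$key is already set in $conf" >&2
    return 1
  fi
  printf '%s = %s\n' "$key" "$name" >> "$conf"
}

# Example (prefix and name are placeholders):
# add_databasename structr.conf XXXXXXXXXX graph.db
```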

Data Flow and Handling

Whenever a Flow Element has a data source socket available, data can be made available to the element. If such an element has a connected data source, it queries data from its source before performing its own function. The data source itself can have another data source, which enables data to be constructed recursively from multiple data elements. For elements that allow scripting, the acquired data is made available under the static data key. While this is true for all basic elements, Aggregate is a special case: it takes two data source inputs and makes them available as data and currentData, based on their respective sockets.

Aggregate

| Name | Description |
|---|---|
| Prev | Accepts another element’s Next socket |
| Next | Connects to another element’s Prev socket |
| CurrentData | Accepts another element’s DataSource. The given data is made available as currentData within the scripting context. |
| Data | Accepts another element’s DataSource. The given data is the start value of the aggregation and sets its initial value. |
| DataTarget | Connects to another element’s DataSource socket. Contains the aggregated data of the element. |
| ExceptionHandler | If connected to an ExceptionHandler, exceptions thrown in the scripting context are handled by the referenced handler. |
| Script | Scripting context used to aggregate the data. The return value is written to the element’s data. |

Store

| Name | Description |
|---|---|
| Prev | Accepts a connection from another element’s Next socket |
| Next | Connects to another element’s Prev socket |
| DataTarget | Connects to another element’s DataSource |
| DataSource | Accepts a connection from another element’s DataTarget |
| Operation | Switches between storing and retrieving data. When retrieving, DataSource is ignored; when storing, DataTarget is ignored. |
| Key | Key under which to store, or from which to retrieve, data |

Neo4j Upgrade

If you are upgrading from a Neo4j version below 4, the default database may be called “graph.db” instead of “neo4j”, which is the default name from version 4 onward. Since v4.1, Structr supports selecting a database (if the Neo4j installation it connects to supports this). By default, Structr connects to the “neo4j” database. If the data was migrated from an older version, the correct database name needs to be configured in the structr.conf file using the “xxxx.database.connection.databasename” setting, as shown in line 4 of the following example.

Aggregate

The Aggregate element is used to aggregate data within loops in the Flow Engine. It works similarly to reduce functions in other languages. In its scripting context, the keywords data and currentData become available: data represents the initial value for the aggregation, and currentData contains the dataset of the current loop iteration. The script must process and return the aggregation result; in the next iteration, data contains the result of the previous aggregation.
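The reduce semantics described above can be mimicked in plain shell to show how data and currentData evolve from one iteration to the next. This is an analogy only, not Flow Engine code; the function name aggregate_sum is hypothetical and the "script" here simply adds the current value to the running result.

```shell
#!/bin/sh
# Analogy (not Structr code): mimics the Aggregate element's reduce semantics.
# "data" starts as the initial value; each iteration receives "currentData",
# and the value the "script" returns becomes "data" for the next iteration.
aggregate_sum() {
  data=$1                          # initial value, as provided via the Data socket
  shift
  for currentData in "$@"; do      # one value per loop iteration
    data=$((data + currentData))   # the "script": return data + currentData
  done
  echo "$data"                     # final aggregation result
}

aggregate_sum 0 1 2 3   # prints 6
```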

Neo4j Upgrade

YOUR_CONFIGURED_DB_NAME.database.connection.url = bolt://localhost:7687

YOUR_CONFIGURED_DB_NAME.database.connection.name = YOUR_CONFIGURED_DB_NAME

YOUR_CONFIGURED_DB_NAME.database.connection.password = your_neo4j_password

YOUR_CONFIGURED_DB_NAME.database.connection.databasename = graph.db

YOUR_CONFIGURED_DB_NAME.database.driver = org.structr.bolt.BoltDatabaseService

Comparison

| Name | Description |
|---|---|
| DataSources | Accepts multiple DataTarget connections. Data that will be compared. |
| ValueSource | Accepts a DataTarget connection. Value that the given data will be checked against. |
| Operation | Defines the boolean operator to be applied. |
| Result | Connects to a logic node’s DataSource or a Decision’s Condition |

KeyValue

| Name | Description |
|---|---|
| DataTarget | Connects to an ObjectDataSource’s DataSource. |
| DataSource | Accepts another element’s DataTarget. |
| Key | Key identifier under which the given data is added to the object |

Create Example Data

Now that we’ve created our project type, we can create database objects of this type by heading over to the Data area via its entry in the main menu. Filter the type list in the left column by entering Project to get the list of existing projects (it should be empty at the moment). Click on the “Create new Project” button to create a new project. This creates a new entry in the table. The table lists properties of the new project, including some system attributes like id, name or owner. For now we will only use the name property, which can be set by clicking into the respective cell in the table and typing in the desired name. We create three projects with the names “Project 1”, “Project 2” and “Project 3”.

Accessing the Data

Structr automatically creates REST endpoints from the data model that allow you to read and write data as JSON documents. You can send a JSON document to an endpoint, and Structr automatically creates the corresponding object structure in the database, based on the schema definition in the data model. This works in both directions, so Structr is essentially a document database extension on a graph database.
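A minimal sketch of this round trip with curl follows. It assumes a Structr instance on localhost:8082 with the /structr/rest base path, a Project type as created in the example above, and admin/admin as valid credentials passed via X-User/X-Password headers; the function names and environment variables are hypothetical. The JSON is built without escaping, so names must not contain double quotes.

```shell
#!/bin/sh
# Sketch: create and read Project objects via the generated REST endpoint.
# Assumed: Structr on localhost:8082, a "Project" type, admin/admin credentials.
STRUCTR_REST=${STRUCTR_REST:-http://localhost:8082/structr/rest}
STRUCTR_USER=${STRUCTR_USER:-admin}
STRUCTR_PASSWORD=${STRUCTR_PASSWORD:-admin}

# Prints the endpoint URL for a type, e.g. .../structr/rest/Project
type_url() {
  printf '%s/%s\n' "$STRUCTR_REST" "$1"
}

# Builds a minimal JSON document (no escaping: keep names free of quotes)
project_json() {
  printf '{"name": "%s"}\n' "$1"
}

# POSTs the JSON document; Structr creates the corresponding object
create_project() {
  curl --silent --request POST "$(type_url Project)" \
    --header 'Content-Type: application/json' \
    --header "X-User: $STRUCTR_USER" --header "X-Password: $STRUCTR_PASSWORD" \
    --data "$(project_json "$1")"
}

# GETs all Project objects back as JSON
list_projects() {
  curl --silent "$(type_url Project)" \
    --header "X-User: $STRUCTR_USER" --header "X-Password: $STRUCTR_PASSWORD"
}
```

With a running instance, `create_project "Project 1"` followed by `list_projects` should return the new project as part of a JSON result.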

Database Connections

| Setting | Description |
|---|---|
| Name | The name of this connection, so that multiple configured connections can easily be distinguished. |
| Driver | Database driver, either Neo4j or Memgraph DB. |
| Connection URL | URL of the remote database. |
| Database Name | Database name to use (available from v4.1). Defaults to neo4j; if the installation started with an earlier version of Neo4j, graph.db will be the correct value. |
| Username | Username used to log in to the database. |
| Password | Password used to log in to the database. |
| Connect immediately | Controls whether a connection is established immediately or manually. |

import-data

importData <source> <doInnerCallbacks> <doCascadingDelete> - Imports data for an application from a path in the file system.

<source> - absolute path to the source directory

<doInnerCallbacks> - (optional) decides if onCreate/onSave methods are run and function properties are evaluated during data deployment. Often this leads to errors because onSave contains validation code which will fail during data deployment. (default = false. Only set to true if you know what you're doing!)

<doCascadingDelete> - (optional) decides if cascadingDelete is enabled during data deployment. This leads to errors because cascading delete triggers onSave methods on remote nodes which will fail during data deployment. (default = false. Only set to true if you know what you're doing!)

File Upload

Binary data in the form of files can be uploaded to the integrated file system in Structr with a form-data POST request to the upload servlet running on path /structr/upload.

The file to be uploaded must be sent as the value of the key file. All other properties defined on the File type can be used to store additional data or to link the file directly to an existing data entity in the database.

# example file upload with additional parameter "action" and target directory "parent"
curl --location --request POST 'http://localhost:8082/structr/upload' \
  --form 'file=@"/Users/lukas/Pictures/user-example.jpg"' \
  --form 'action="ec2947e760bd48b291564ae05a34a3b7"' \
  --form 'parent="9aae3f6db3f34a389b84b91e2f4f9761"'

Connecting flow elements

Now that our Action contains a script, we want it to return its result. For this to happen, we have to make sure the flow execution does not stop at the Action element. Drag a connection from the “Next” socket of the Action element to the “Prev” socket of the Return element to create an execution flow, indicated by the green color of the connection. Our function can now return something after the Action has been executed, but at this point it will return an empty result, because we have not yet connected data to the Return element. In the same way as the first connection, create a connection between the elements’ “DataTarget” and “DataSource” sockets to create a data flow. When a node is evaluated by the engine, it considers connected data flows and makes their data available within its scripting context, as in the case of an Action or Return element.
